Spelling sucks. “Though” rhymes with “go”. “Thought” rhymes with “got”. “Through” rhymes with… “true”, “grew”, “who”, “lieu”, “you”, “flu”, “moo”, “shoe”, “coup”, “queue”, and “view”. Enough examples, let’s fix this madness!

Here’s a hypothetical procedure to reform English spelling: for every word, it has some IPA pronunciation. For instance, “through” is pronounced “θɹu”. Now let’s replace every letter in the IPA pronunciations with strings of one or more letters, such as mapping all occurrences of “θ” to “th”, “ɹ” to “r” and “u” to “oo”. Of course, we don’t want the new phonetics-based spellings to get too crazy, so we should minimize the total number of changes between the old and new spellings. Let’s define the “amount of change” between two spellings as the minimum number of insertions, deletions, and single-character replacements, which is technically called the Levenshtein edit distance. In our little example, the edit distance between “through” and “throo” is 3, because we have to replace the “u” with an “o” and delete the last two characters. Lastly, we should make this sum of edit distances weighted by the frequency of each word in typical English, since people care a lot more if the spelling of “through” changes versus the spelling of “queue”.

Let’s do another example to understand the problem. Imagine some random farm with perfectly cylindrical Belted Galloway cows (AKA oreo cows). The cows have a language with exactly three words:

through θɹu 0.01

moo mu 0.98

cow kaʊ 0.01

The first word of each input line is the old spelling, the second word is the IPA pronunciation, and the third is the word’s frequency. Anyways, just like me, the cows think English spelling sucks, so they’re embarking on a spelling reform journey. Since the word “moo” is very common, tells means we really want to keep the new spelling as close as possible to the old spelling. Keeping that in mind, here’s the solution:

θ th

ɹ r

u oo

m m

k c

a o

ʊ w

The new spellings are “throo”, “moo”, and “cow”. Since “moo” and “cow” are unchanged, the total edit distance sum is 3*0.01 which is 0.03. Hooray!

Note that in this problem, we don’t need the pronunciations to be in IPA. The old spelling and pronunciation are allowed to be arbitrary strings.

My name is Hard, NP-Hard

If you try solving some wackier instances of this problem (inputs for this problem), you’ll soon realize that this spelling reform problem seems hard. For the cow example, the solution was pretty obvious. But if the old spellings and pronunciations aren’t closely related, then we might have to brute-force through a ton of combinations. Also, mapping a phonetic letter to some letter to keep the spelling the same for one word can mess up a whole bunch of other words. And edit distance isn’t the nicest thing to work with either.

But how hard really is this problem? You might have heard of NP-hard before, but what does that even mean? It’s actually pretty simple, but first, we need to do a few definitions. They’ll be nice, easy definitions, I promise. This is just to ensure that our sentences don’t devolve into vague nonsense like “you make the thing into the other thing”.

A decision problem is a problem which has a yes or no answer. A simple example would be the problem of determining whether a string has an “a” in it or not. Our spelling reform problem is sadly not a decision problem, but it can be turned into one easily by asking if this language has a spelling reform that makes the total edit distance sum less than some value K. Note that this decision version of spelling reform is easier, since if we can solve the original minimization problem, we can obviously solve the decision problem, but not vice versa. But as we’ll see soon, even the decision problem is hard.

NP is the class of all decision problems that can be checked (not solved!) in polynomial time. (NP technically stands for nondeterministic polynomial time, but nondeterminism is weirdly magical so we won’t delve into it. Also, I’m just going to assume you know what polynomial time is, since otherwise you’re about to have a rough time reading onward.) For example, our spelling reform decision problem is in NP, since given the actual assignments of the pronunciation letters to letters in the new spelling, we can construct all the new spellings, compute the edit distances, and then check if the edit distance sum is less than K or not. This can all be done efficiently in polynomial time. However, we don’t know if there is an efficient way to actually solve the problem from scratch. You can easily verify a solution to some instance of an NP problem, but finding the solution itself doesn’t necessarily have to be easy.

Yay, we’re finally ready to meet our new best friend, NP-hardness. A problem is NP-hard if it’s harder than all problems in NP, or if it’s one of the hardest problems in NP (in this case it’s called NP-complete). Basically, if we have a magic black box (technically called an oracle) that can magically solve our NP-hard problem, then we can devise polynomial time algorithms that use this black box to solve any problem in NP.

Reductions

So is our spelling reform decision problem really that hard? Can we really solve all NP problems using spelling reform?

The answer is yes, and we will prove this by showing our problem is even harder than an NP-hard problem called 3SAT. Because if our problem is even harder than an NP-hard problem, it must be really, really hard, right? Well, time for more fun definitions!

SAT (short for satisfiability) is a problem where we’re given a boolean expression and we want to know if there’s some way to set the variables to make the expression true or not. For instance, say we’re given (!a || (b && c)) && (!b || c || !d). The answer to this particular instance is yes, because we can set all the variables to false which makes the whole thing true. 3SAT is a variant where the boolean expression must be the && of a bunch of clauses. Each clause consists of the || of at most 3 different variables, which can be possibly negated. The previous example is not in this format so it wouldn’t be a valid 3SAT expression, but something like (!a || b) && (!b || c || !d) would be fair game. SAT and 3SAT are both NP-hard, which we won’t prove (the proof for SAT is really cool though).

We will now do a reduction from 3SAT to our spelling problem. This means that we will create a polynomial time procedure where given a 3SAT instance, we will convert it to a spelling instance. Then, we will run some magic spelling problem solver black box thingy which magically gives us a solution to the spelling instance. Then, we’ll convert that solution back into a 3SAT solution. We’re basically showing that any 3SAT instance can be turned into an equivalent spelling instance, so 3SAT is kind of a subset of the spelling problem, so our problem must be even harder!

Constructing the reduction

Alright, so where to start? Is our spelling problem even that 3SAT-y? Let’s try to convert a specific 3SAT instance (!a || b) && (!b || c || !d) into a spelling reform problem.

Let’s start with boolean variables. Naturally, we should make the phonetic letters the boolean variables, since the phonetic letters are the things we’re choosing assignments for. For example, the variables a, b, c, d in the 3SAT instance become the phonetic letters a, b, c, d, and we can choose to map each letter to the uppercase or lowercase version to “set that variable to true or false”. The uppercase version will represent false and the lowercase version will represent true. However, the spelling reform solution could be naughty and map a phonetic letter to some other random letter or to a longer string of letters, which would be bad. Fortunately, we can force the solution to do our intended behavior by adding some heavily weighted words:

a a 10000

A a 10000

b b 10000

B b 10000

c c 10000

C c 10000

d d 10000

D d 10000

If the solution maps each phonetic letter to the uppercase or lowercase version, then the edit distance sum will be 40000. Otherwise, if they try something else, their sum will be much worse.

For the clauses, we would like a clause to be true if any one of the three variables inside is true since that’s how || works, so we’d like a word to have a low edit distance if the letters of it are “true”. I thought about this for a long time last night while trying to fall asleep and here’s what I came up with:

##Ab## ba 1

##BcD## dcb 1

For the old spellings, we’ll write down the 3SAT clauses as words, converting negated variables to uppercase letters, and then pad with ## on both sides. For the phonetic pronunciations, we’ll write the variables in lowercase in reverse. What the heck is going on?

Imagine a simple clause a || b || c. This corresponds to:

##abc## cba 1

If the solution sets all the variables to false, then we get the old and new spellings:

##abc##

CBA

If a is set to true:

##abc##

CBa

If b is set to true:

##abc##

CbA

If both a and b are set to true:

##abc##

Cba

Magic! Well, it’s not really magic after you work through all the cases. Basically, if none of the variables are true, then the edit distances is 7, and if at least one is true, then it drops down to 6. The reversed order of the letters in the phonetic pronunciation is to ensure that setting multiple variables to true does not make the edit distance even smaller. If the order wasn’t reversed, then that last example would look like this:

##abc##

abC

The padding on both sides is to keep the edit distance at 7 normally. If we didn’t have it, then setting all the variables to false would cause the edit distance to be 3:

abc

CBA

Overall, we basically have a bunch of heavily weighted words to force our choices into only choosing uppercase or lowercase for the variables, and then a clever clause construction that has two possible edit distances, depending on whether at least one variable is true or not. Finally, in 3SAT we want all the clauses to be true, so that corresponds to setting a value K in our decision problem so you can only reach K if you make all the clauses 6 instead of 7. That’s all! If our decision problem has a solution, then the 3SAT instance must have a solution, and if it doesn’t, then the 3SAT instance doesn’t have one either. Yay! Thus, spelling reform is harder than 3SAT, so it’s NP-hard! (It’s also NP-complete since this decision problem is in NP as mentioned earlier.)

Why should we care?

Alright, so we just proved that spelling reform is NP-hard. So what?

There’s a famous open problem in computer science called the P versus NP problem (with a one million dollar prize!). We haven’t defined P yet, but it’s simply the class of all decision problems that can be solved in polynomial time. Easy as that. The question is whether or not P is the same class as NP. Obviously, P is inside NP, because we can easily check a solution by ignoring the solution that’s being offered to us and just finding a solution ourselves. However, no one knows yet if problems that are easy to check are also easy to solve. If this is true and P equals NP, then a ton of hard problems are actually easy and solvable in polynomial time. This is pretty crazy, since NP contains some famous hard problems like the Traveling Salesman Problem and factoring that people have spent mounds of effort trying and failing to come up with polynomial time algorithms for. But it would take finding a polynomial time algorithm to just one NP-complete problem to prove that P equals NP, since if one of the hardest problems in NP is solvable in polynomial time, then they all are. Most people believe that P does not equal NP because of the crazy consequences of P equals NP, but there are some notable people who disagree.

OK, that’s some fascinating theoretical CS, but why should we care? Well, taking the common view that P does not equal NP, that means that NP-hard problems do not have fast, polynomial time algorithms. We can’t possibly solve them efficiently. Once we prove a problem is NP-hard, then we can shift from searching for efficient exact algorithms to finding heuristics, or approximation algorithms, or algorithms that perform well for typical or random inputs but struggle with some specific contrived inputs that you’ll never see in practice. Or maybe you should try to parallelize a slow brute-force algorithm or acquire more hardware. Or maybe it’s even a sign that you should change or simplify the problem! Also, if you spend all day thinking about finding algorithms for solving a problem quickly, then pondering how to prove a problem hard can give you an interesting new perspective on the problem. And NP-hard problems are dirt-common, so you’ll definitely run into them at some point.

Interestingly, a lot of NP-hard problems are actually still pretty feasible to solve in practice. For instance, there are lots of highly optimized SAT solvers that use various heuristics to perform well in practice. For our spelling reform problem, it would probably be not difficult to solve it for the entire English language, since even though there are thousands of English words, most of the consonant should just stay the same. There’s no reason to change “b” into anything other than a “b”. In addition, a lot of real-world inputs are also fairly small. For instance, my Installing Every Arch Package post includes an NP-hard problem, maximum independent set, and the size of the graph is 12000 nodes! And I brute-forced the solution! What? Well, it turns out the graph doesn’t have large connected components, so we can brute-force each connected component separately.

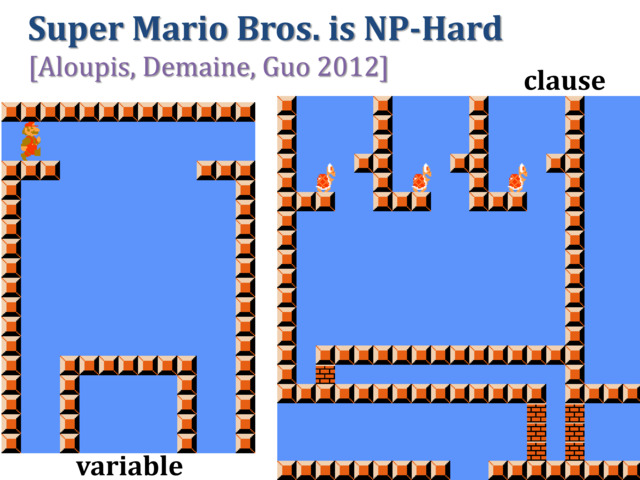

And lastly, you should care about NP-hardness because it’s just plain fun. When the original Super Mario Bros. was proved NP-hard, there were whole news articles written about it. What’s really cool is that the proof is actually quite similar to the one we did above. Most NP-hardness proofs have a similar style, just often the constructions are a lot more complicated. Usually when we prove something NP-hard, we reduce from some NP-hard problem that is already similar to our problem, so we aren’t limited to just reducing from 3SAT. Mario though, turns out to be quite similar in some sense to 3SAT, so check out the proof! It’s fun!